Episode 02: The Language of Computers

Listen directly on this page or visit the links in the top right to listen on your favorite podcast provider, Apple Podcasts, Spotify, or Stitcher.

Billions of lines of code running our phones wake us up, serve us the morning news, and show us our friends’ stories. This code runs our computers, which let us call into video conferences and respond to emails. We run our businesses, talk to people, and discover new ideas thanks to these billions of lines of code. The technology is used by people all around the world, but this code is composed almost exclusively of English keywords. In today’s episode, we’ll explore why English is the language of technology and how this impacts the non-English-speaking world.

Source: Railly News (link)

Part 1: History of computing (the abacus)

Today’s story starts with the advent of computers. Language is what makes humans unique from other forms of life, and language enables us to share ideas and be creative. As different civilizations sprouted around the world, different languages formed, and over 7,000 languages exist today. On the internet, however, English accounts for around 60% of all text on websites, and only a few hundred languages are available at all.

Source: History Computer (link)

One of the first devices that humans invented to aid calculations was available in a universally understood language -- math. Prehistoric humans used counting sticks, and in the Fertile Crescent people used clay spheres and cones to represent counts of items, such as livestock or grains. Later, more sophisticated methods to perform calculations were invented independently around the world. There was a variety of early abacuses from Mesopotamia, Egypt, Persia, and Greece, to name a few. Rome has records of using counting tools since 2400 BCE, and the first archaeological evidence of an abacus dates back to the 1st century CE. The word abacus comes from Latin and Greek.

China potentially started using abacuses in the 2nd century BCE. They called them suan pan, or calculation tray. The Tang dynasty likely imported the concept of zero from India in the 7th or 8th century, which sped up the advancement of mathematics. By the 3rd century, there are records of abacuses in India.

Subsequently, for hundreds of years, each of these civilizations was a leader in math, science, and technology. They developed many concepts independently and shared ideas through trade routes. Their languages, such as Latin, Greek, and Mandarin, each had their own terminology for key concepts. In fact, the emergence of English as the leading language of science and technology is fairly recent. Let’s take a look at how this came to be.

Part 2: Computers in the West (Europe and US)

The word "computer" comes from the Latin words putare, which means ‘to think’, and computare, which means ‘to calculate’. Historians don’t agree on the first use of the word ‘computer’ in English. However, they do agree that, early on, the word almost always described a person or an occupation.

The first use of the word “computer” came from one of these two sources. In 1613, Richard Braithwait, an English writer, allegedly used the word “computer” to describe a person who performed calculations. Later, in the 1640s, Thomas Browne, an English physician and author, used it in the sense of a person who "calculates, a reckoner, one whose occupation is to make arithmetical calculations."

Besides the abacus, modern computing in the West can be traced back to the invention of the slide rule. The slide rule is an example of an analog computer that could perform complex calculations, such as multiplication, division, exponents, roots, and trigonometry, through the clever use of logarithms. The Reverend William Oughtred, an English mathematician, and others developed the slide rule in the 17th century based on the emerging work on logarithms by John Napier, a Scottish mathematician. Before the advent of the electronic calculator, the slide rule was the most commonly used calculation tool in science and engineering. Its use continued to grow through the 1950s and 1960s even as computers were being gradually introduced, but when the handheld electronic scientific calculator became widely available in 1974, the use of slide rules declined. My high school physics teacher actually used a slide rule in class quite often.
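The logarithm trick behind the slide rule is easy to show in code. Here's a minimal Python sketch, assuming base-10 logarithms and positive inputs. The function names are made up for this illustration and don't come from any real library. The idea is that adding two logarithms is the same as multiplying the original numbers, so sliding one scale along the other turns multiplication into adding lengths.

```python
import math


def slide_rule_multiply(a: float, b: float) -> float:
    """Multiply two positive numbers the way a slide rule does: by adding logarithms."""
    # On a slide rule, the distance of a mark along the scale is log10(value).
    distance = math.log10(a) + math.log10(b)  # sliding the scales adds the two lengths
    return 10 ** distance  # reading the scale turns the length back into a number


def slide_rule_divide(a: float, b: float) -> float:
    """Divide by subtracting one length from the other."""
    return 10 ** (math.log10(a) - math.log10(b))


if __name__ == "__main__":
    # Results are approximate, just like reading a physical slide rule.
    print(slide_rule_multiply(2, 3))
    print(slide_rule_divide(10, 4))
```

A real slide rule only gives you about three significant digits, and you have to keep track of the decimal point yourself. The floating-point result here is far more precise, but it is still an approximation for the same underlying reason.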

Source: BBC News (link)

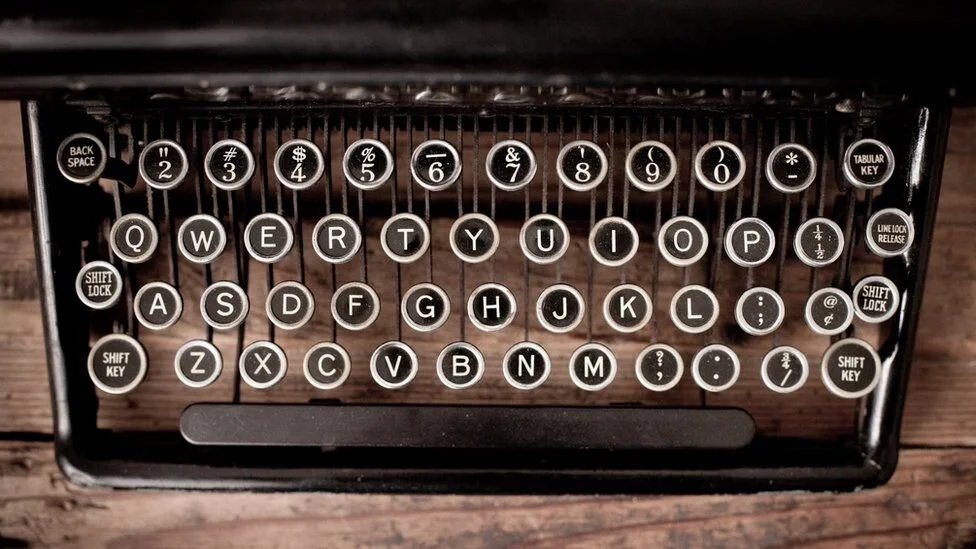

A component we use today on nearly every computer is the keyboard. The QWERTY keyboard layout was designed in 1873 by Christopher Latham Sholes for typewriters. This has become the standard English keyboard layout, though at the time of its invention, typewriters of different brands would often have different letter configurations.

Nowadays, computers can be programmed to perform custom functions. Charles Babbage, an English mechanical engineer and polymath, came up with the concept of a programmable computer. Babbage is considered the “father of the computer” because he designed a machine called the Analytical Engine, which was the first design for a Turing complete machine. That basically means it could be programmed to carry out any computation that any other computer can. The machine was mechanical and would have read and printed punch cards, which is how it would have taken in programs and reported results. Though he designed the machine in 1837, Babbage never fully built it due to financial reasons.

Of course I also have to mention the world’s first computer programmer, Ada Lovelace, who was Babbage’s contemporary and wrote the first program that could in theory be executed by his machine. About Babbage’s Analytical Engine, she said,

“It might act upon other things besides number, were objects found whose mutual fundamental relations could be expressed by those of the abstract science of operations, and which should be also susceptible of adaptations to the action of the operating notation and mechanism of the engine...Supposing, for instance, that the fundamental relations of pitched sounds in the science of harmony and of musical composition were susceptible of such expression and adaptations, the engine might compose elaborate and scientific pieces of music of any degree of complexity or extent.”

She said this about the potential of computers in the 1840s, which is absolutely incredible, considering that 150 years later computers really did gain the power to synthesize music and art.

Eventually, the word “computer” shifted from describing people to describing devices. In 1922, the New York Times began using the word computer to refer to electronic devices made for firing weapons on enemy ships.

By 1938, the United States Navy had developed an electromechanical analog computer small enough to use aboard a submarine. This was the Torpedo Data Computer, which used trigonometry to solve the problem of firing a torpedo at a moving target. During World War II similar devices were developed in other countries as well.

If you recall the previous episode, we briefly mentioned that the first modern computer was built by the British, led by Alan Turing, due to the need to crack German codes. Turing and his team built devices called bombes, that’s b-o-m-b-e, based on a Polish design, to efficiently crack messages encoded with the German Enigma machine. The bombes were basically running a brute force algorithm that tried all possible letter encodings, with the help of powerful heuristics, such as likely letter combinations and impossible encodings.
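To give a feel for the "impossible encodings" heuristic, here's a small Python sketch. It relies on one well-known property of the Enigma machine: it never encrypted a letter as itself. So if the codebreakers guessed that a message contained a certain word (a "crib"), they could rule out any position where a letter of the guess lined up with the same letter in the ciphertext. The function name and the sample ciphertext below are made up for illustration. This is a toy version of one filtering step, not a simulation of the bombe itself.

```python
def possible_crib_positions(ciphertext: str, crib: str) -> list[int]:
    """Return the offsets where a guessed word could line up with the ciphertext.

    Enigma never mapped a letter to itself, so any alignment in which a crib
    letter equals the ciphertext letter at the same position is impossible.
    """
    positions = []
    for offset in range(len(ciphertext) - len(crib) + 1):
        window = ciphertext[offset:offset + len(crib)]
        # Keep this offset only if no letter matches itself.
        if all(cipher_letter != guess_letter
               for cipher_letter, guess_letter in zip(window, crib)):
            positions.append(offset)
    return positions


if __name__ == "__main__":
    # Hypothetical ciphertext; "WETTER" (German for "weather") is an illustrative guess.
    print(possible_crib_positions("ABWDEFTTERGH", "WETTER"))  # [1, 6]
```

In this made-up example, five of the seven possible alignments are thrown out before any expensive searching begins, which is the whole point of a heuristic like this.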

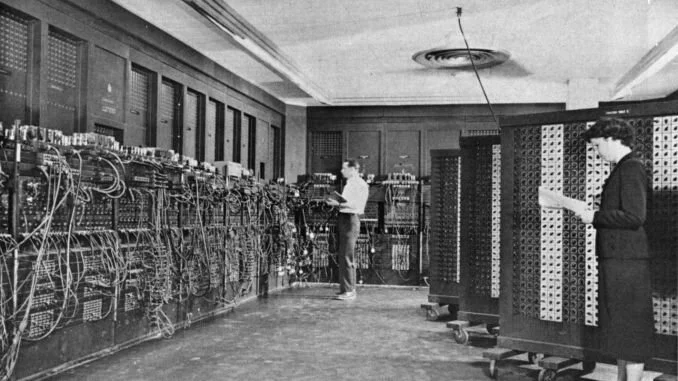

Around the same time, in 1942, the Atanasoff Berry Computer was created at Iowa State University. Then, in 1945, the ENIAC, which stands for Electronic Numerical Integrator and Computer, was built for the US Army. It was the first Turing complete machine to be physically built, and it was around 1000 times faster than any other machine at the time. It cost $500,000, or around $7 million today, which is not cheap but also not too bad if you think about the Army’s total budget.

Source: 24celebs.com (link)

I think it’s kind of cool to learn about where all our technology came from and how quickly it happened. There were over 3000 years between the invention of the abacus and the invention of the Analytical Engine, and fewer than 200 years between that and the invention of the smartphone. The first Turing complete machine cost $7 million in today’s money, and now we can purchase a Turing complete smartphone for a few hundred dollars. One of my college professors at MIT told us that when he was an undergrad, the fastest supercomputer in the world was housed in a huge room and was extremely loud due to its mechanical moving parts. He and his classmates would walk past the room and just stare at the computer doing its work. Now that same room is used to teach freshman year physics, and a pocket-sized smartphone is many times more powerful than that supercomputer.

Part 3: Computers in the East (China)

Now we just talked about the history of computing in the West. What about computing in the East? China is very proud of its rich history and accomplishments in math and science, but while all that good stuff was developing in the US and Europe, a very different situation was taking place in China.

At a high level, a popular theory about why China was left behind in the computing race is that the Chinese population was large enough and workers cheap enough that the country didn’t need to industrialize to maintain high levels of productivity. This theory is known as Mark Elvin’s high-level equilibrium trap. Other events, such as the Opium Wars and European colonization, also held China back from industrializing.

In addition, China was undergoing a civil war that saw the deaths of millions of people. This was the war fought between the Kuomintang, the party founded by Sun Yat-sen and led by Chiang Kai-shek, and the Chinese Communist Party, led by Mao Zedong. The war officially began in 1927 and ended in 1949, when the Communists won.

During this time, China’s scientists were unable to get steady support for their work, and many went overseas to study and stayed there afterward. WWII necessitated the development of computers because each side wanted to crack the other’s codes. The Chinese Civil War, by contrast, was fought with sheer numbers and brute strength, and science was actually rejected. There is a commonly told story about Mao ordering farmers to kill all the birds to keep crops from being eaten, which instead led to even more famine because there was nothing left to eat the bugs that really harmed the crops.

When Mao finally did win the war in 1949, he aligned himself with the Soviets, which enabled China to catch up and take part in the nuclear weapons race. Mao’s successor Deng Xiaoping made science and technology one of the Four Modernizations, meaning its development was of top importance to the national economy. Though China was still vastly agrarian, science and technology were used to aid agriculture, such as through the creation of high-yield rice. In the 1980s China began aggressively importing technology, such as electronics, communication devices, and transportation devices.

The Chinese students who studied abroad and opted to return home were met with skepticism and prejudice. Confucianism was still a dominating doctrine, and Eastern scholars and politicians rejected Western beliefs, such as individualism, freedom of speech, and democracy. The Chinese Communist Party kept a strict hold over which Western studies and ideas were acceptable (and necessary for China to be a world player) and which were not. This line of thinking wasn’t only prevalent in China either -- Japan made it illegal to use English terms in baseball, a game they learned from Americans and came to love.

Source: Observer (link)

Between 1979 and 1986, China sent over 35,000 students abroad, 23,000 of whom went to the United States. Interestingly, China’s richest person, Zhong Shanshan, was an elementary school dropout during the Cultural Revolution. He owns China’s biggest bottled beverage company. China’s second and third richest people are Jack Ma and Pony Ma, who were both educated in Chinese universities in the late 1980s and early 1990s. They are not related, but they are the founders of Alibaba and Tencent, which are China’s biggest tech companies and two of the biggest tech companies in the world.

Part 4: The language of computers

To me, this is a partial explanation of why English is the dominant language of computing. The pioneers spoke English, and a large number of programmers around the world were educated in American and British universities. When international students went back to their countries of origin, they brought back what they learned in English, and for the sake of ease of communication across languages, people used English as the common tongue.

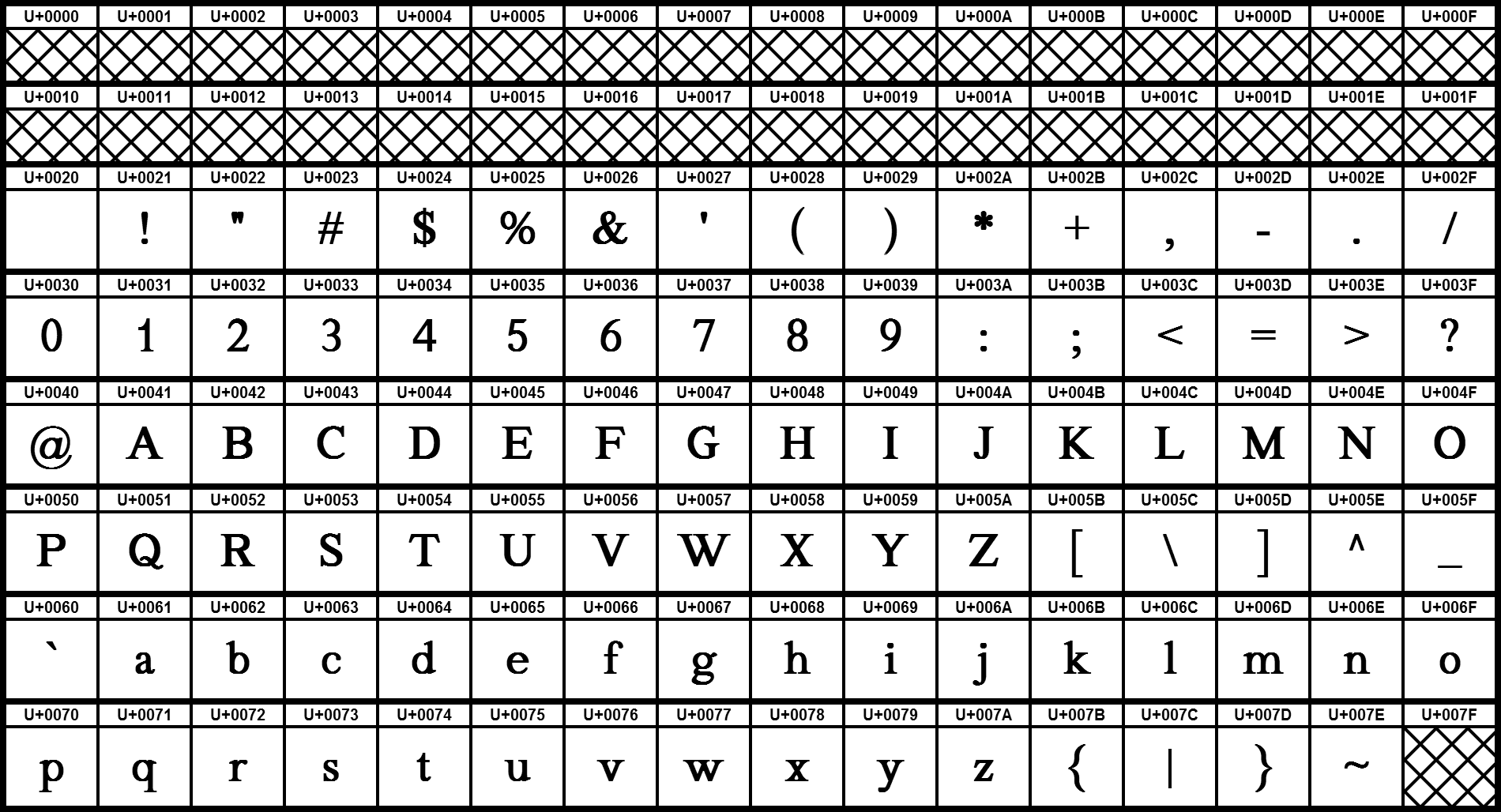

In the previous episode we learned about Unicode, the language of computers. Does it surprise you to know that the first block of characters in Unicode consists of Latin characters, or in other words A-Z? All the characters required to write English are included in the first block of Unicode. There is a practical reason to use Latin characters though -- there are only 26 letters. The major distinction we need to make first is between script and language. English is a language that is written using the Latin script. Other languages, such as Spanish and French, also use Latin characters.

English is about as space efficient as a language can be in UTF-8, the most widely used Unicode encoding, because the first 128 characters of Unicode, which encompass all the Latin characters required to write English, are each encoded with 1 byte. Accented Latin letters and many other scripts, such as those used to write Hebrew and Arabic, are encoded with 2 bytes per character. Most CJK characters, which are used to write Chinese, Japanese, and Korean, need 3 bytes each.
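You can check those byte counts yourself with a few lines of Python. This is just a minimal sketch, and it assumes the text is stored as UTF-8 (other Unicode encodings, like UTF-16, give different numbers). The sample characters are my own picks, written as escape codes so they survive copy and paste.

```python
def utf8_length(char: str) -> int:
    """Return how many bytes one character takes when encoded as UTF-8."""
    return len(char.encode("utf-8"))


# Sample characters chosen for illustration only.
examples = [
    ("A", "Latin letter used in English"),
    ("\u00e9", "Latin letter e with an acute accent"),
    ("\u05e9", "Hebrew letter shin"),
    ("\u0639", "Arabic letter ain"),
    ("\u4e2d", "Chinese character zhong"),
    ("\u3042", "Japanese hiragana a"),
    ("\ud55c", "Korean syllable han"),
]

if __name__ == "__main__":
    for char, description in examples:
        print(f"{description}: {utf8_length(char)} byte(s)")
```

Running this prints 1 byte for the English letter, 2 bytes for the accented Latin, Hebrew, and Arabic letters, and 3 bytes for each of the Chinese, Japanese, and Korean characters.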

Source: Wikiwand (link)

Over a third of programming languages were developed in English-speaking countries. But some well-known, widely used languages were developed in non-English-speaking countries, e.g., Switzerland (Pascal), Denmark (PHP), Japan (Ruby), and the Netherlands (Python).

Hardware is another reason why English is so predominant in programming languages. With a standard QWERTY keyboard, it’s natural to use English keywords when programming. If you recall, QWERTY was designed in 1873 for a typewriter, and many people speculate that this configuration of characters is optimal for fast typing. What’s interesting is that to this day we use it, even on our phones, where most people type with their pointer fingers or their thumbs.

Languages with non-Latin scripts have often adapted ways to type their characters on a QWERTY keyboard, where the keys labeled as “ABC” map to strokes, and pressing multiple keys in a row types out one well-formed character. Even as native English speakers, we can relate to this experience. In the 2000s, before smartphones, we used to text each other on a 12-key number pad, pressing the 2 button once to get ‘A’, twice to get ‘B’, and three times to get ‘C’. This is a major throwback for me, but if it’s possible for an entire generation to learn this method of typing, surely it’s possible that QWERTY keyboards will no longer be used in the future.
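That old keypad scheme is simple enough to sketch in a few lines of Python. This is a toy version under a couple of assumptions of my own: each letter is a run of the same digit, and a space stands in for the short pause you had to take between letters. The names KEYPAD and decode_multitap are made up for this example.

```python
# Letters printed on each key of a standard phone keypad.
KEYPAD = {
    "2": "ABC", "3": "DEF", "4": "GHI", "5": "JKL",
    "6": "MNO", "7": "PQRS", "8": "TUV", "9": "WXYZ",
}


def decode_multitap(presses: str) -> str:
    """Decode space-separated runs of key presses, e.g. '44 444' becomes 'HI'."""
    letters = []
    for group in presses.split():
        key = group[0]
        # Each group must be the same letter key pressed one or more times.
        if key not in KEYPAD or set(group) != {key}:
            raise ValueError(f"not a valid run of key presses: {group!r}")
        options = KEYPAD[key]
        # One press gives the first letter, two the second, and so on,
        # wrapping around if the key is pressed more times than it has letters.
        letters.append(options[(len(group) - 1) % len(options)])
    return "".join(letters)


if __name__ == "__main__":
    print(decode_multitap("44 33 555 555 666"))  # HELLO
```

Notice that "HELLO" takes thirteen key presses here instead of five, which gives a small taste of why typing in a script the keyboard wasn't designed for feels slow.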

So in short, English is perhaps the best language to use to write programs because it is the most space efficient and quickest to type.

Part 5: The impact of English and English loan words in other languages

I grew up and went to school in the US and I currently work at an American tech company, so I interviewed one of my colleagues, David, who started his career in Taiwan as a software engineer. I was hoping to gain some insight into what working in the software industry is like in countries where English is not the primary language spoken. This is what David had to say about his experience:

I started to learn how to code in high school with peer mentorships, with some limited material available in Traditional Chinese, however it's only enough to get people started. When I was in college, our textbooks and reference materials were mostly English, while the classes were still instructed in Mandarin. One thing I noticed which is a big difference between mainland China vs Taiwan, is people from China would translate all terminologies to Chinese, which made it possible to have discussions entirely in Chinese. But in Taiwan only some of them got translated, meaning in discussions you'll see English words being used directly, e.g. data structure names like tree, stack, and queue. Sometimes it depends on whether there's already a commonly used word. For example, tree would usually get translated to the Chinese word for tree, but DFS (depth first search), people just say DFS.

In general students start to learn English from middle school and English comprehension is part of the college admission exam, hence it's usually not an issue in college to have courses taught in English. But for high school students trying to learn programming, they struggle more. Some forums, like the equivalent of Stack Overflow, use Chinese for discussions, but all technical terms are usually in English. In code, people generally name variables in English but sometimes you'll see funny variable names, like “$wtf1” and “$wtf2”. Chinese programmers often use conventional English variable names, but they’ll comment on the side in Chinese characters as an ad-hoc translation of what the variable does.

There are huge differences in industry culture. In general, it's hard to find a good software engineer job in Taiwan due to the industry being a bit more hardware focused, but it's getting better in the past 5 years with more successful software startups. We use English for emails too, despite the fact that we usually speak in Chinese face-to-face, mostly because it is easier to use that same language when typing. Typing in CJK, the input method for Chinese, Japanese, and Korean characters, is usually very slow.

Much of what David said corresponds with what we know about computer technology and how it developed in China. English is being taught in primary and secondary schools around the world now as a required language, and many jobs require some English proficiency. I think it’s interesting that mainland China tends to invent its own terms for concepts in English, because it is similar to how Japan wanted to prevent Western ideologies from taking hold by forbidding the use of English baseball terms. Even though we as individuals might not have a horse in this race, the practice of inventing terms in that country’s own language is something that still affects us individually.

It’s also sort of funny to me that even though a common programming tenet is to use self-descriptive variable names, programmers whose first language is Mandarin would use nonsense variable names and just add a comment about what the variable actually represents. It goes to show that English as the primary language of programming is not sufficient to enable all people to communicate their ideas. It puts people who don’t speak English at a distinct disadvantage.

I read through a few online forums that discuss programming in non-English languages where software engineers share very similar experiences.

For instance, one Spanish user said

“Spain has a traditional problem with foreign languages. Spaniards younger than 40 are supposed to know English from school but the plain fact is that the level of English is close to zero almost everywhere. There are basically two types of software environments: code that's supposed to be shared with international parties (open source projects, Spanish offices of foreign multinationals, vendors who sell abroad) and code that's sold locally. The former is of course written in English but the latter is normally written in Spanish, both variable names and documentation. Words in variables lose accents and tildes as required to fit into 7-bit ASCII (dirección -> direccion) and English bits may be used when they represent a standard language feature (getDireccion) or a concept without an universally accepted translation (abrirSocket). Sometimes removing tildes changes the meaning of a word, and many programmers intentionally spell words wrong to avoid typing something offensive.”
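The accent-stripping this programmer describes can be sketched in Python with the standard unicodedata module. This is only an illustration of the idea, not how any particular team does it. The function name strip_accents is my own, and the approach only handles characters that break down into a base letter plus an accent mark.

```python
import unicodedata


def strip_accents(word: str) -> str:
    """Drop accents and tildes so a word fits in plain ASCII identifiers."""
    # NFD normalization splits an accented letter into a base letter
    # followed by a separate combining mark.
    decomposed = unicodedata.normalize("NFD", word)
    # Keep everything except the combining marks.
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


if __name__ == "__main__":
    print(strip_accents("direcci\u00f3n"))  # direccion
    print(strip_accents("a\u00f1o"))        # ano
```

The second example shows the problem the quote mentions: with its tilde, the word means "year," but without it you get a different word entirely.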

A French programmer related,

“In France, many people tend to code using French objects/methods/variables names if they work with non English speaking colleagues. However, it really depends on your environment. The rule of thumb is, 'the more skilled people you are working with / the projects you are working on are, the more likely it is that it is going to be in English’.”

A Polish programmer agreed,

“Many developers in Poland prefer to get English documentation rather than Polish translation and the reason for that is that translations were not always accurate. Even Microsoft developer documentation was translated partially or with errors, so reading original English documentation was easier than English-Polish soup.”

Part 6: The future of programming

All this is to say, English continues to be used around the world because it standardizes communication amongst all groups of developers. If a Chinese developer and a Spanish developer were to create a startup together, they’d use English to communicate. If a French engineer created a new software package, they’d write up the documentation in English. It seems like using English is inevitable. But one thing we touched on briefly was the impact this has on people who are just starting out.

Source: Open Source (link)

Scratch is a blocks-based programming language, and it is one of the few programming languages that is localized. Blocks-based programming languages are popular for teaching children how to program, because they offer a visualization for instructions like loops and conditionals, and have a plug-in, drag-and-drop functionality. Scratch developers conducted a study with groups of children, some who spoke English as their native language and some who spoke another language as their first language. The researchers then taught the children Scratch, some in English and some in their native language. They found that children who learn Scratch in their native language gain proficiency much faster than those who learn it in English. The children are able to understand what each component does and to use each component correctly much more easily when the components are named in words they already understand.

What do you think this implies for the future of programming? As computers become more prevalent in all parts of the world, so will computer engineers. I’d love to hear your thoughts, so feel free to leave a comment. Thanks for listening, and I’ll catch you on the next episode.

M

Citations

Atwood, Jeff. “The Ugly American Programmer.” Coding Horror, Coding Horror, 29 Mar. 2009, blog.codinghorror.com/the-ugly-american-programmer/.

“Computer (n.).” Online Etymology Dictionary, www.etymonline.com/word/computer.

“Computer.” Wikipedia, Wikimedia Foundation, 26 July 2021, en.wikipedia.org/wiki/Computer.

“The Curious Origin of the Word 'Computer'.” Interesting Literature, 25 Jan. 2020, interestingliterature.com/2020/02/origin-word-computer-etymology/.

“Do People in Non-English-Speaking Countries Code in English?” Software Engineering Stack Exchange, 1 Feb. 1959, softwareengineering.stackexchange.com/questions/1483/do-people-in-non-english-speaking-countries-code-in-english.

“Eniac.” Wikipedia, Wikimedia Foundation, 4 July 2021, en.wikipedia.org/wiki/ENIAC.

Hill, Benjamin Mako. “Learning to Code in One's Own Language.” Copyrighteous, 27 June 2017, mako.cc/copyrighteous/scratch-localization-and-learning.

McCulloch, Gretchen. “Coding Is for Everyone-as Long as You Speak English.” Wired, Conde Nast, 8 Apr. 2019, www.wired.com/story/coding-is-for-everyoneas-long-as-you-speak-english/.

Metz, Rachel. “Why We Can't Quit the Qwerty Keyboard.” MIT Technology Review, MIT Technology Review, 2 Apr. 2020, www.technologyreview.com/2018/10/13/139803/why-we-cant-quit-the-qwerty-keyboard/.

“Non-English-Based Programming Languages.” Wikipedia, Wikimedia Foundation, 31 July 2021, en.wikipedia.org/wiki/Non-English-based_programming_languages.

“Qwerty.” Wikipedia, Wikimedia Foundation, 3 Aug. 2021, en.wikipedia.org/wiki/QWERTY.

“r/ProgrammerHumor - Do People in Non-English-Speaking Countries Code in English?” Reddit, www.reddit.com/r/ProgrammerHumor/comments/2ilr0i/do_people_in_nonenglishspeaking_countries_code_in/.

Sakisaka, Nana. “Reality of Programmer's English Skill in NON-ENGLISH-SPEAKING COUNTRIES?” DEV Community, DEV Community, 16 Nov. 2017, dev.to/saki7/reality-of-programmers-english-skill-in-non-english-speaking-countries-a4j.

“Science and Technology in China.” Wikipedia, Wikimedia Foundation, 11 July 2021, en.wikipedia.org/wiki/Science_and_technology_in_China#Electronics_and_information_technology.

“Slide Rule.” Wikipedia, Wikimedia Foundation, 13 July 2021, en.wikipedia.org/wiki/Slide_rule.

Vocabularist, The. “The VOCABULARIST: What's the Root of the Word Computer?” BBC News, BBC, 2 Feb. 2016, www.bbc.com/news/blogs-magazine-monitor-35428300#:~:text=%22Computer%22%20comes%20from%20the%20Latin,to%20think%20and%20to%20prune.&text=In%201660%20Samuel%20Pepys%20wrote,a%20person%20who%20did%20calculations.

Y Studios. “INSIGHTS: Passion: Y Studios - the Language of Codes : Why English Is the Lingua Franca of Programming.” Y Studios, Y Studios, 5 July 2019, ystudios.com/insights-passion/codelanguage#:~:text=Over%20a%20third%20of%20programming,and%20The%20Netherlands%20(Python).

김대현 . “언어.” 한글코딩, xn--bj0bv9kgwxoqf.org/%EC%96%B8%EC%96%B4.html.